Leading hardware and software companies are accelerating the introduction of autonomous vehicles by collaborating to fast-track the development of a customizable and cost-effective autonomous driving system.

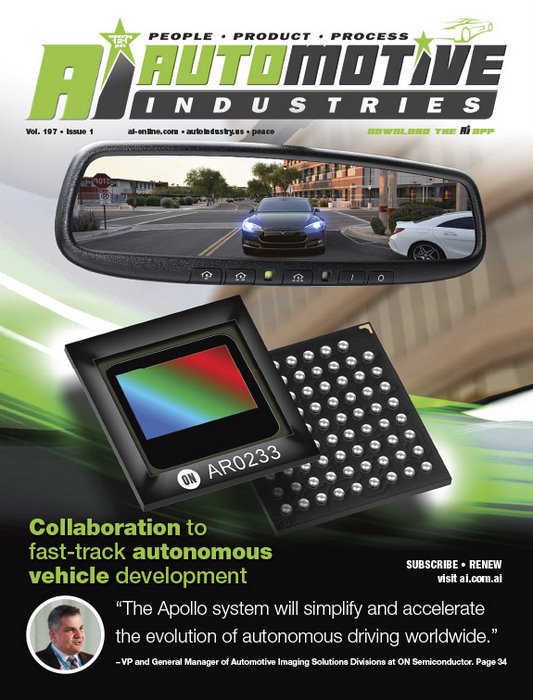

One of the newest companies to join Baidu’s Apollo Autonomous Driving Platform is ON Semiconductor, which adds a fully-qualified 3μm-based advanced CMOS image sensor to the system. It will serve as a foundation for the development of vision systems for autonomous driving, with the flexibility to move to future sensors at volume deployment. With high dynamic range (HDR), the sensor is able to provide crisp, clear single images and video in low and mixed light conditions.

In addition to simplifying and accelerating the design, test and implementation of automotive camera applications, the relationship gives Apollo ecosystem members priority access to ON Semiconductor’s technical support and product information. Apollo partners will also get early access to the latest image sensing technologies from ON Semiconductor. “Given the importance of cameras as sensors for autonomous driving, we considered many aspects in our selection process for the Apollo platform including product roadmap, experience in automotive, and technical support for ecosystem platforms,” said Zhenyu Li, VP and General Manager of Intelligent Driving Group at Baidu, in a press statement. “ON Semiconductor was the right choice for Apollo platform’s image sensor needs.”

Automotive Industries (AI) asked Ross Jatou, VP and General Manager of ON Semiconductor, to share some of the challenges for image sensor integration in autonomous driving solutions.

Jatou: Developing autonomous driving solutions is quite different from the typical human vision solutions we see in automotive today. Autonomous driving will demand higher levels of safety and security, particularly in the areas of functional safety and cybersecurity. This requires major investment to incorporate higher levels of safety and security into what will become the eyes and ears of the vehicle.

AI: How will the ON Semiconductor-Apollo collaboration help in offering solutions to automotive OEMs?

Jatou: The Apollo system will simplify and accelerate the evolution of autonomous driving worldwide. Developing an autonomous vehicle is much more complex than the integration of several computers. It would be simpler to develop a workstation or supercomputer than to develop an autonomous car. So, for every car company to do their own supercomputer would take a huge amount of effort. Autonomous systems are also very costly to develop. Apollo will not only provide access to data gathering and training technologies for system development, it will deliver a whole package available for adoption, enabling companies to more rapidly get into autonomous driving.

AI: What are the advantages of Baidu’s Apollo Autonomous Driving Platform?

Jatou: Apollo’s platform is based on partnerships with many technology leaders. This means it is more like an open system than a closed proprietary system. Baidu approached technology leaders such as ON Semiconductor to come together and collaboratively develop a world-class system, and this level of collaboration makes the platform more robust, safer and more secure. As it is introduced, Baidu will get feedback from adopters, which will be used to improve the system and roll out new capabilities. Any bugs that are found in the software and hardware get reported directly to Baidu, and fixes are rolled into the distribution so all of the platform users can benefit.

AI: Realistically, how close are we to level 4 or 5 in autonomous driving in the near-term future?

Jatou: We are much closer than people think. The social and economic benefits of autonomous driving are so large that they are measured in the trillions of dollars by various government and transportation agencies. Therefore, it is inevitable that autonomous vehicles will come sooner rather than later. The benefit is too great to let it just organically come to fruition. Vendors are already doing very advanced trials on public roads now in many cities around the world. By 2020 we will see level 4 and level 5 cars in limited volume, and it will increase from there. The issue with adoption, or the hurdle in people’s minds of why this is a monumental effort, is being broken down into smaller pieces. That is done by geo-fencing areas where autonomous vehicles can operate, starting with regions that are well mapped, well tested and validated, and then expanding the area of coverage accordingly. That lends itself really well to commercial vehicles, to buses, and robot taxis. So, I think there will be adoption in those types of vehicles and from there it will grow into a mainstream technology, so by 2020 there will be level 4 cars available.

AI: What are some of the challenges of image sensors for autonomous vehicles?

Jatou: Image sensors have to work well in variable conditions: bright daylight, low light and darkness. They have to be able to distinguish objects in challenging environments, like going into a tunnel on a bright day and seeing all the vehicles and lane markings inside that tunnel. In other words, they need high dynamic range, or HDR. In addition, they have to work in harsh environments such as the extreme cold of northern cities in January as well as mid-day desert heat. And automotive sensors must work reliably all the time. In a consumer device, if an image sensor does not work you can always turn it off and on. It may be inconvenient, but it is not the end of the world. But for an image sensor on an autonomous car going through a tunnel, turning it off and on is not an option. The level of quality and reliability that autonomous driving demands is often expressed in FIT, or “Failure in Time,” which is measured in failures per billion hours. For autonomous driving, a single-digit FIT or lower will be necessary, meaning that 1 million cars driving for 1,000 hours each (1 billion hours in total) will collectively see fewer than 10 severe errors.
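The FIT arithmetic Jatou describes can be checked with a few lines of Python. This is only an illustrative sketch: the function name `expected_failures` and the sample FIT value of 9 are assumptions chosen for the example, not figures or tooling from ON Semiconductor.

```python
# FIT is defined as failures per one billion (10^9) device-hours.
HOURS_PER_FIT_UNIT = 1_000_000_000


def expected_failures(fit: float, units: int, hours_per_unit: float) -> float:
    """Expected number of failures across a fleet for a given FIT rate.

    Hypothetical helper for illustration only.
    """
    device_hours = units * hours_per_unit  # total operating hours of the fleet
    return fit * device_hours / HOURS_PER_FIT_UNIT


# 1 million cars driving 1,000 hours each is 1 billion device-hours,
# so the expected failure count equals the FIT value itself.
print(expected_failures(fit=9, units=1_000_000, hours_per_unit=1_000))  # 9.0
```

Because the fleet in this example accumulates exactly one billion hours, a single-digit FIT translates directly into fewer than 10 expected failures, which matches the figure quoted in the interview.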

AI: How important is it to ensure autonomous driving is both safe and green?

Jatou: Autonomous driving by definition will help the environment. Coupled with the electrification of cars it makes a very good combination for a better, greener environment. Part of the vision for autonomous driving is that every vehicle will have much higher utilization. Instead of being driven for an hour a day, the typical car will be driven for approximately 20 hours a day. So, instead of having many cars sitting empty in a parking lot all day long, they will be utilized elsewhere. Parking lots in large cities could be converted to green space or walking plazas.

AI: As an integral part of autonomous driving solutions how do you ensure that image sensors are safe?

Jatou: We have been focusing on functional safety in accordance with ISO 26262 for several years, and we have learned over time that this needs to be addressed from the ground up. During development we inject faults into every node of our image sensors and look at the outcome, and we find failures never seen before. Understanding these failures means we have designed all our hardware cores to be able to detect faults and report them to the processor at every frame. Not only do we find failures, we find them very quickly, which means an error doesn’t propagate to create a dangerous situation. It is also part of our responsible engineering culture that we don’t throw these faults over the fence to the processor and have them addressed in software. While our functional safety features address random hardware and software errors, we have added cybersecurity features to protect against hacking or human interference that would compromise safety. In our most recent sensor portfolio for autonomous driving we added a suite of cybersecurity protection measures including an entire processor that covers various aspects of encryption, authentication and any peculiar traffic between the processor and the sensor, to name a few.

AI: Will image sensors be enough or will they require other sensor types?

Jatou: In poor lighting conditions, including snow and fog, one needs to rely on other sensor types, such as radar. This is why ON Semiconductor acquired a radar division from IBM early last year. We are excited to offer our first generation of high resolution imaging radars in 2018, and believe it will revolutionize the radar industry.


The 2.6-megapixel (MP) AR0233 CMOS image sensor is capable of running at 60 fps while simultaneously delivering ultra-high dynamic range and LED flicker mitigation along with all of the Hayabusa platform features.
