Automotive Industries interview with Eran Ofir, CEO, Imagry Autonomous Driving Software

In this interview with Automotive Industries, Eran Ofir, CEO of Imagry (www.imagry.co), discusses the distinctive features of Imagry’s autonomous driving software, highlighting its mapless approach and hardware agnosticism. Ofir begins by explaining the limitations of traditional HD maps, which many companies use for autonomous navigation. Imagry, however, adopts a different approach that mimics human driving by creating real-time, on-the-fly maps based on the vehicle’s immediate environment. This method eliminates the need for continuously updated HD maps, thereby avoiding the associated communication costs, network dependencies, and cyber vulnerabilities. It also enables location independence: because data about objects and driving guidelines is collected in real time, the vehicle is not limited to any specific geography.

Ofir emphasizes that Imagry’s software is hardware agnostic, providing OEMs and Tier-1 suppliers the flexibility to choose their preferred components. This adaptability allows for cost-effective and efficient integration across various vehicle models, facilitating rapid deployment of SAE L3/L4 autonomous solutions. Imagry’s AI-based system uses real-time vision-based perception and supervised learning to support autonomy in both passenger cars and buses. By focusing on a robust AI-driven framework from the start, Imagry ensures its software can handle the complexities of SAE L3/L4 autonomy without attempting to rely on a patchwork of L2 (ADAS) solutions.

The interview delves into the technical aspects of Imagry’s software, which employs a two-phase approach of perception and motion planning. Multiple cameras capture real-time images, and deep convolutional neural networks (DCNN) process these images to create a detailed, dynamic map of the surroundings. This data is then used for motion planning, where the AI mimics human decision-making to generate control instructions for the vehicle.

Ofir also highlights the advantages of Imagry’s distributed neural network architecture over traditional rule-based systems. This modular approach allows for more efficient learning and adaptation to new driving scenarios, enhancing the system’s ability to respond to unforeseen situations. The continuous data collection from Imagry’s fleet of autonomous test vehicles, operating simultaneously in various countries, ensures that the system’s capabilities are constantly improving through supervised learning and human annotation.

Safety is a paramount concern for Imagry, and Ofir outlines how their system incorporates real-time perception and strict adherence to traffic rules to ensure safe operation. Unlike unsupervised systems that might mimic risky human behaviors, Imagry’s AI is designed to prioritize safety, akin to a skilled human driver.

Imagry’s approach to autonomous driving stands out due to its rejection of HD maps in favor of real-time perception, its hardware-agnostic design, and its location independence. This results in a more adaptable, cost-effective, and safer autonomous driving solution suitable for various environments and vehicle types.

Automotive Industries: Hi Eran, can you elaborate on how Imagry’s autonomous driving software stands out in the market, particularly regarding its mapless capabilities and hardware agnosticism?

Ofir: When it comes to the development of autonomous driving technology, the role of HD (High-Definition) maps has been central to the discussion for years. Many companies have invested significant resources in creating and maintaining these expensive HD maps, believing they are an essential component of the self-driving puzzle. However, at Imagry we challenge the notion that HD maps are the right solution for enabling vehicles to drive autonomously. Much like a human driver, the Imagry-enabled vehicle is not limited to known (“mapped”) roads. Instead, it navigates unfamiliar areas based on real-time perception of the environment, creating an “on the fly” map as the vehicle drives. Only Tesla and Imagry are known for this mapless, “location-independent” approach. Furthermore, self-driving vehicles need to be as self-sufficient as possible to avoid the hefty communications costs, network failures, and cyber vulnerability inherent in keeping HD maps continuously updated in the vehicle. With the Imagry system, all data required to perform safe and accurate motion planning, based on real-time inputs, is contained within the computing platform located inside the vehicle. This data can be updated periodically “over the air” to benefit from the Imagry method of supervised learning (training of the neural networks, which are the heart of Imagry’s AI-based system). It is our belief that the future of autonomous driving won’t be with HD maps, but rather will be achieved through an adaptable and dynamic AI architecture capable of navigating existing road infrastructure.

Unlike most other autonomous driving software solutions, Imagry’s system is hardware agnostic, allowing OEMs and Tier-1 suppliers the flexibility to choose the components – cameras, computing platform, controllers, sensors, etc. – that best suit their needs and are most cost-effective for each vehicle and model. Furthermore, flexible hardware requirements translate into lean and efficient engineering, easy integration into various self-driving vehicles, and rapid deployment of SAE L3/L4 solutions.

Automotive Industries: Could you explain how Imagry’s AI-based autonomous driving software supports SAE L3/L4 autonomy in both passenger cars and buses?

Ofir: Unlike other players in the field, who believe that by patching together various ADAS functions (e.g., cruise control, lane keeping assist, automatic braking, etc.) they will achieve SAE L3/L4 autonomy, we took the opposite approach. From the get-go, we developed software that would support SAE L4 autonomy, and our SAE L3/L4 product roadmap was based on two restrictive factors: computing power and regulation. As Tesla does, we use AI-based perception (using visual sensors – i.e., cameras) to identify objects surrounding the vehicle at the current moment. Then, we plan the vehicle’s motion based on the route suggested as a result of the supervised learning training process during product development.

The same software modules, perception and motion planning, as well as a similar system architecture and camera configuration, are used in both passenger vehicles and buses.

Automotive Industries: How does Imagry’s technology utilize real-time vision-based perception and imitation-learning AI to create a driving decision-making network based on the vehicle’s current surroundings?

Ofir: We use a two-phase approach: Perception and Motion Planning. In the first, multiple cameras positioned around the vehicle collect real-time images of the surrounding area. Then, using proprietary IP tools for image collection and processing, various deep convolutional neural networks (DCNN) are employed, each responsible for identifying and classifying a different type of object (traffic lights, traffic signs, parked/moving vehicles, pedestrians, road markings, lanes, etc.). The result is a real-time, 360°, 3D, HD-equivalent map of the surrounding area up to a distance of 300 meters in front of the vehicle. Then, the generated map is passed to the Motion Planning stage, which includes a DCNN trained by supervised learning techniques to mimic the decision-making process that takes place in a human driver’s cortex. This stage produces control instructions for the autonomous vehicle as to how to react under the current circumstances: brake, change path, turn, or accelerate.
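
Editor's illustration: the two-phase flow described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not Imagry's implementation. Every name in it (`Detection`, `make_stub_detector`, `perceive`, `plan`) is hypothetical, the per-object detectors are stubs that read pre-labeled data instead of running a DCNN on camera images, and the planner uses hand-written distance thresholds purely as a placeholder for the trained motion-planning network. Only the overall shape (one detector per object type, a merged scene limited to 300 meters, then a single driving decision) comes from the interview.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Detection:
    kind: str          # object type, e.g. "pedestrian" or "traffic_light"
    distance_m: float  # distance ahead of the vehicle, in meters
    state: str = ""    # optional attribute, e.g. "red" for a traffic light


# Hypothetical stand-in for one synchronized set of camera images:
# object type -> list of (distance in meters, state) pairs.
Frame = Dict[str, List[Tuple[float, str]]]
Detector = Callable[[Frame], List[Detection]]

MAX_RANGE_M = 300.0  # perception range quoted in the interview


def make_stub_detector(kind: str) -> Detector:
    """Build a stub detector for one object type (stands in for one DCNN)."""
    def detect(frame: Frame) -> List[Detection]:
        return [Detection(kind, dist, state) for dist, state in frame.get(kind, [])]
    return detect


def perceive(frame: Frame, detectors: List[Detector]) -> List[Detection]:
    """Phase 1: run every detector and merge the results into one scene."""
    scene: List[Detection] = []
    for detector in detectors:
        scene.extend(d for d in detector(frame) if d.distance_m <= MAX_RANGE_M)
    return sorted(scene, key=lambda d: d.distance_m)  # nearest object first


def plan(scene: List[Detection]) -> str:
    """Phase 2: choose an action. Assumed thresholds, not a learned policy."""
    for d in scene:
        if d.kind == "pedestrian" and d.distance_m < 30:
            return "brake"
        if d.kind == "traffic_light" and d.state == "red" and d.distance_m < 50:
            return "brake"
        if d.kind == "parked_vehicle" and d.distance_m < 40:
            return "change_path"
    return "accelerate"


if __name__ == "__main__":
    detectors = [make_stub_detector(k)
                 for k in ("pedestrian", "traffic_light", "parked_vehicle")]
    frame: Frame = {"pedestrian": [(120.0, "")], "traffic_light": [(45.0, "red")]}
    print(plan(perceive(frame, detectors)))  # prints "brake"
```

Keeping one detector per object type mirrors the separation of tasks described in the interview: a detector can be replaced or retrained without touching the others, and the planner only ever sees the merged scene.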

Automotive Industries: In what ways does Imagry’s Motion Planning stack leverage supervised learning techniques to mimic human driving behavior and generate instructions for autonomous vehicles?

Ofir: As opposed to the classic, long-established “rule-based” software development approach to motion planning, which struggles to adapt to new, unforeseen situations, Imagry’s neural network-based systems excel at adapting to new circumstances by interpolating between known situations, just as a human driver does. The Imagry system uses multiple small neural networks (a distributed architecture) rather than a single large one. This modular approach, where different tasks are handled by separate networks, facilitates supervised learning as it simplifies the identification and classification of different driving scenarios.

This video shows how the Imagry solution navigates dynamic situations, such as roadwork and skateboarders.

Automotive Industries: How does Imagry ensure the accuracy of its motion planning and expand its database of annotated use cases, particularly through its fleet of autonomous test vehicles?

Ofir: Imagry vehicles have been driving autonomously on public roads since 2019, and are currently operating in the U.S., Germany, Japan, and Israel. The vehicles being used for development purposes continually collect data which is then processed by human annotators to improve the system’s capabilities. The more data that is collected, the more edge cases are encountered, and the more accurate the response of every subsequent vehicle that is operating using Imagry technology.

Automotive Industries: Could you elaborate on the safety measures embedded within Imagry’s AI-based autonomous driving solution and how they resemble those of a skilled human driver?

Ofir: Embedded safety measures are the direct result of the Imagry autonomous driving approach, which combines real-time object perception and supervised learning. Information about current road and traffic conditions is always up to date, traffic rules (signals, signs, road markings, speed limits) are always taken into account, and the vehicle is always programmed to err on the side of safety. It’s as if there is a co-pilot physically present at all times, maintaining a safety envelope around the vehicle. For example, unlike the unsupervised approach, wherein the autonomous vehicle may perform a “rolling stop” at a stop sign (since the majority of drivers do so), in the Imagry system the vehicle mimics a skilled and law-abiding human driver.

Automotive Industries: Is the Imagry solution field-proven and commercially available?

Ofir: Yes, it is. In May of last year we signed a commercial agreement with Continental, the third-largest Tier-1 supplier in the world. They are incorporating Imagry motion planning software in their autonomous driving platform. Furthermore, we have been awarded three different L4 autonomous bus projects to date (one in an operational zone, the other two on public roads in cities – see demo drive videos below), with more under consideration. Last but not least, we are ramping up activities in Japan: we are currently engaged in a proof-of-concept (POC) with a leading OEM on the streets of Tokyo, and we are proposing our L4 autonomous bus projects to alleviate the 20% shortage in professional bus drivers there.

This video shows Imagry’s autonomous bus projects.

Automotive Industries: What sets Imagry apart from other autonomous driving software providers, especially in terms of its approach to achieving full autonomy without relying on HD maps?

Ofir: By going HD-mapless, Imagry achieves the following advantages over HD-map-based autonomous driving technology:

1. Understanding the Road Infrastructure – Real-time (not static) representation; constant scanning provides up-to-date information on dynamic road conditions

2. Data Storage – No data storage is required, as no external information is necessary. This also complies with GDPR (General Data Protection Regulation)

3. Communication – No cloud-based connection is needed as the vehicle is self-sufficient, removing reliance on network coverage and exposure to cyber vulnerability

4. Localization and Perception – Eliminates reliance on GPS and compass for localization as real-time image recognition is used for safe and accurate motion planning

5. Cost and Resource Efficiency – Much more economical solution as the camera-based approach is inexpensive and scalable

6. Relevance for Different Environments – Adaptable to various terrains and conditions; suitable for both on-road and unstructured off-road applications

Conclusion

In this interview, Eran Ofir, CEO of Imagry, highlights how their autonomous driving software’s mapless approach and hardware agnosticism provide a versatile, cost-effective, and safer solution. By mimicking human driving and relying on real-time perception, Imagry’s system enables efficient integration across diverse vehicle models, enhancing the feasibility of deploying SAE L3/L4 autonomy.

More Stories

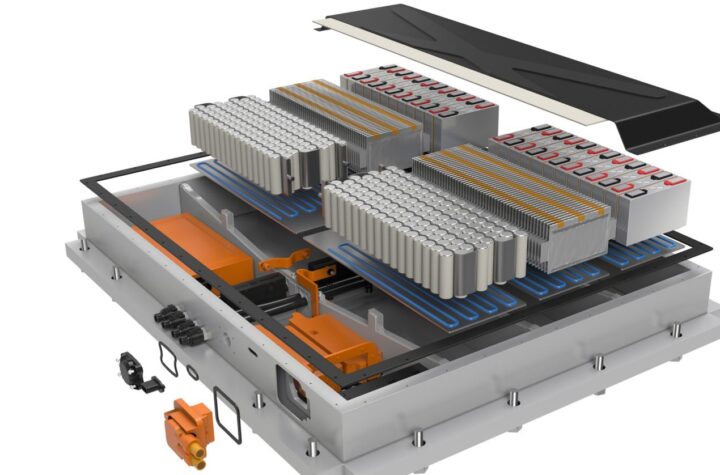

DuPont materials science advances next generation of EV batteries at The Battery Show

How a Truck Driver Can Avoid Mistakes That Lead to Truck Accidents

Car Crash Types Explained: From Rear-End to Head-On Collisions