Automobiles are costly to develop. They require investment in technology, product development, manufacturing, and tooling.

Developing the supply chain and manufacturing line takes time and money, and large-volume production runs carry their own risk. A mistake in design or manufacturing can lead to defects entrenched in the product, with large warranty and rework costs, or, worse still, harm to customers and the public at large.

The systems (the social system as well as the technical) can be so complex that we may not notice what could cause us grief later, unless we take the time during development to explore and critique them with commensurate diligence and care. Our portfolio of techniques contains many tools that help us avoid these traumas, and one of the most powerful provides a systematic critique of the features of the system, product, and process.

Three variants and the team

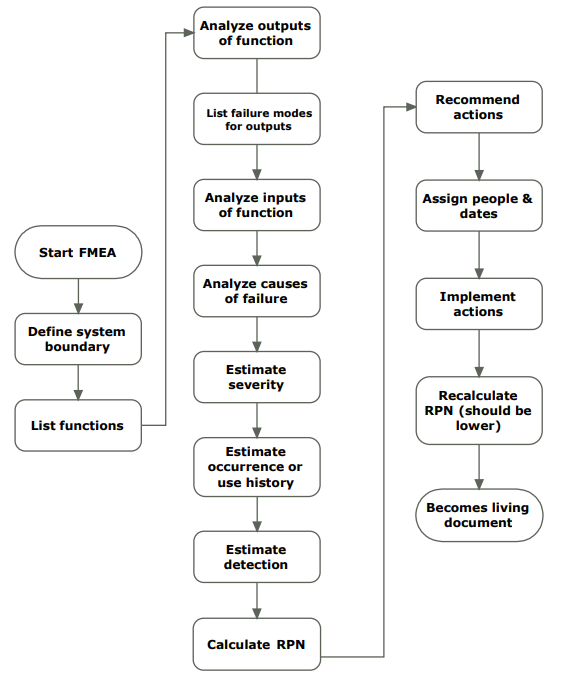

A significant technique for identifying these damaging potentialities is the Failure Mode and Effects Analysis (FMEA). We sometimes hear people pronounce FMEA as “fee-ma”; this is not to be confused with the Federal Emergency Management Agency (FEMA).

The Automotive Industry Action Group (AIAG) blue book, Potential Failure Mode and Effects Analysis, describes the FMEA as a systemized group of activities intended to:

- Recognize and evaluate the potential failure of a product or a process and its effects.

- Identify actions which could eliminate or reduce the chance of the potential failure mode occurring.

- Document the process.

This approach is a structured critique of the system, product or process.

From a systems perspective, the focus is on the structure of the system, to determine potential failure modes. The design scope directs focus on the product: the ways in which the design incarnation under review could fail, and the consequences of such a failure. The process version focuses on failures in the manufacturing process that would lead to errant product being built and possibly shipped to the customer.

No matter the focus area, whether SysFMEA (system focused), DFMEA (design focused), or PFMEA (manufacturing process focused), the FMEA, when done well, helps us uncover potential problems and rank them by criticality. It also provides a base from which we can critique our thinking (through tests and experiments) and determine appropriate improvement actions.

Productivity

For this to be productive, the team needs a variety of relevant perspectives: systems competencies for the system FMEA; design, testing, and field experience for the design FMEA; and the associated design, industrial, and manufacturing competencies for the process FMEA.

These are only suggestions of the types of expertise; each situation will call for its own specific team skills. The team members must also be given time for the exploration. The output is only as good as the time taken to understand the item under critique.

Priority

We should not get hung up on the minutiae of estimating the level of potential trauma. The process requires experts to make judgement calls on the severity, the frequency of occurrence, and the ability to detect each failure. The point is to provide a mechanism for prioritizing the theorized failures. These three values are combined into a Risk Priority Number (RPN):

- RPN = Severity x Probability x Detection

Or alternatively:

- RPN = Severity + Probability + Detection

The AIAG manual provides scales for the assessment, but the evaluation remains somewhat subjective unless historical data are available to support it. The RPN is really a way of prioritizing the things we must absolutely address, distinguishing the most painful potentials from the trivial.
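To make the prioritization concrete, here is a minimal Python sketch that scores failure modes with both RPN forms listed above and then ranks them. It assumes 1 to 10 rating scales. It also assumes that a higher detection rating means the failure is harder to detect. The names `FailureMode`, `rpn_product`, `rpn_sum`, and `prioritize` are hypothetical, as are the failure-mode entries, which are invented for illustration and are not taken from the AIAG manual.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    """One row of a hypothetical FMEA worksheet (ratings assumed on 1 to 10 scales)."""
    description: str
    severity: int
    occurrence: int  # the article's "Probability"
    detection: int   # assumed convention: higher = harder to detect

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1 to 10 scale.
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")


def rpn_product(fm: FailureMode) -> int:
    """RPN = Severity x Probability x Detection (range 1 to 1000 on 1 to 10 scales)."""
    return fm.severity * fm.occurrence * fm.detection


def rpn_sum(fm: FailureMode) -> int:
    """Alternative additive form: Severity + Probability + Detection (range 3 to 30)."""
    return fm.severity + fm.occurrence + fm.detection


def prioritize(modes, score=rpn_product):
    """Return the failure modes sorted from highest to lowest score."""
    return sorted(modes, key=score, reverse=True)


if __name__ == "__main__":
    # Hypothetical entries, for illustration only.
    modes = [
        FailureMode("Connector corrosion", severity=7, occurrence=4, detection=6),
        FailureMode("Seal leak", severity=9, occurrence=2, detection=2),
        FailureMode("Sensor drift", severity=4, occurrence=4, detection=4),
    ]
    for fm in prioritize(modes):
        print(f"{fm.description:22s} RPN={rpn_product(fm):4d} sum={rpn_sum(fm):3d}")
```

With these made-up ratings, both forms put "Connector corrosion" first, but they disagree on the other two items. The multiplicative form ranks "Sensor drift" (4 x 4 x 4 = 64) ahead of "Seal leak" (9 x 2 x 2 = 36), while the additive form reverses that order (13 versus 12). This is one reason to pick a single form and apply it consistently across an analysis.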

Aggressive critique

In addition to having the right competencies and enough time for the effort, the environment must make a serious critique of the item at hand possible, and indeed welcome. An organization that does not accept rigorous review and critique (because of overly political sensitivities) would render the effort largely impotent.

It must be acceptable to aggressively seek out the potential areas of concern and to make them known.

It must be possible to discuss the prioritized results openly and to develop the dependent actions (testing, modeling, and other activities). A colleague of ours says the review should be like piranhas going after a raw steak.

The DFMEA example

By way of example, I will explain one of the approaches, the DFMEA. The DFMEA is a specialized inspection of the product design: a cross-functional tear-down of the product, function by function, with the goal of discovering the product's failure modes and what each failure would mean (the symptom it would produce).

The same approach applies to the system and process FMEAs, with the focus shifted to the systems and processes associated with the product. The DFMEA starts with a decomposition of the functions of the product under review.

Each function of the product and its physical manifestation are considered. The product's functions are why the product is designed and, ultimately, why the customer buys it. We then consider each feature’s failure modes, as well as the physical manifestation of each failure on the customer.
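The function-by-function decomposition can be pictured as a simple nested structure. Each function carries its failure modes, and each failure mode carries the effect (the symptom the customer would see) and the hypothesized mechanisms behind it. The Python sketch below flattens that tree into worksheet-style rows. The class names, the fields, and the windshield-wiper example are hypothetical illustrations, not the AIAG worksheet format.

```python
from dataclasses import dataclass, field


@dataclass
class FailureMode:
    description: str  # how the function fails
    effect: str       # the symptom the customer would experience
    mechanisms: list[str] = field(default_factory=list)  # hypothesized causes


@dataclass
class Function:
    name: str  # what the customer buys the product to do
    failure_modes: list[FailureMode] = field(default_factory=list)


def worksheet_rows(functions: list[Function]) -> list[tuple[str, str, str, str]]:
    """Flatten the tear-down into (function, failure mode, effect, mechanism) rows."""
    rows = []
    for fn in functions:
        for fm in fn.failure_modes:
            # Keep failure modes with no mechanism yet, so they are not silently dropped.
            for mech in fm.mechanisms or ["(mechanism not yet identified)"]:
                rows.append((fn.name, fm.description, fm.effect, mech))
    return rows


if __name__ == "__main__":
    # Hypothetical windshield-wiper example, for illustration only.
    wiper = Function(
        "Clear water from the windshield",
        [
            FailureMode("Wiper does not move", "Driver's view obscured in rain",
                        ["Motor stall", "Linkage disconnected"]),
            FailureMode("Wiper leaves streaks", "Reduced visibility"),
        ],
    )
    for row in worksheet_rows([wiper]):
        print(" | ".join(row))
```

Listing each mechanism on its own row gives every hypothesis its own line, which can then be rated and later tested. The sketch uses built-in generic type hints such as `list[str]`, so it needs Python 3.9 or later.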

Comparison

One of the things that makes the FMEA such a powerful tool (second only to the talent in the review) is the constructive connection between each failure mode and the potential mechanisms that can create it.

This connection, coupled with follow-on testing and post-analysis actions in general, allows us to confirm or, more importantly, refute our hypotheses about the failure. We should remember that it is easy to succumb to confirmation bias, and the FMEA approach can help us overcome this by requiring a step beyond the exploration, and that is:

Testing

The prioritized list will be fodder for further experimentation and, likely, improvement actions. Surmising a result does not make it real. We now need to devise tests to explore whether what we think can go wrong will in fact go wrong in the field or in use, with the consequences for the customer that we theorize. If testing confirms a system, design, or process malperformance, we set about making changes to eliminate the failure, reduce its occurrence or severity, or improve its detectability. The results of this testing will drive design changes should they confirm our theories of:

- The severity of the failure mode

- The frequency of occurrence of the failure

- The ability to detect the failure
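Once testing confirms a failure and a change is made, the team can re-rate the affected factors and compare the RPN before and after the change. The minimal Python sketch below assumes the multiplicative RPN and 1 to 10 scales. The names `Rating` and `apply_test_result` are hypothetical, and the ratings are invented for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Rating:
    severity: int
    occurrence: int
    detection: int  # assumed convention: higher = harder to detect

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1 to 10 scale.
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")

    @property
    def rpn(self) -> int:
        """Multiplicative RPN: Severity x Occurrence x Detection."""
        return self.severity * self.occurrence * self.detection


def apply_test_result(before: Rating, **revised: int) -> Rating:
    """Return a new Rating with only the re-assessed factors changed."""
    return replace(before, **revised)


if __name__ == "__main__":
    before = Rating(severity=7, occurrence=4, detection=6)
    # Hypothetical action: a design change lowers occurrence,
    # and an added check improves detection.
    after = apply_test_result(before, occurrence=2, detection=3)
    print(f"RPN before: {before.rpn}, after: {after.rpn}")
```

Because the dataclass is frozen, the original rating is left untouched and the before-and-after pair can be kept side by side. This fits the aim of documenting the process.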

The downside of a standard approach

In truth, in my experience, taking part in these critiques is either an interesting and productive learning opportunity or about as much fun as watching paint dry.

Like anything, the results depend on preparation and team engagement. A skilled team that believes the work is important (as it is) will likely have an interesting engagement with demonstrated results.

There are downsides to a standardized or formalized approach. In my experience, a standard approach can fall into a check-the-box mentality that yields little or no benefit beyond the illusion that something has happened.

The illusion that we have done all we can to assure system, product, or process quality can lead to failures of unknown magnitude in the field. The illusion is not at all something we want, though we may feel comfortable with it right up until disaster looms.

Besides the risk of the effort descending into a checkbox mentality, the effort is shaped by past experience, which can make it difficult to evoke and evaluate possible theoretical failure modes. This can be mitigated by ensuring diverse perspectives and by not overly restricting the failures considered (pooh-poohing, that is, the outright dismissal of some failure-mode arguments).

Waiting to find problems until the system, product, or process is believed to be in its final iteration is too late, and it will cost us time and effort in further build iterations. A continuous and structured critique of the system, product, or process is an opportunity for early team learning and product improvement, and it is fundamental to delivering a quality product.
